DNC RECOMMENDATIONS FOR COMBATING ONLINE MISINFORMATION

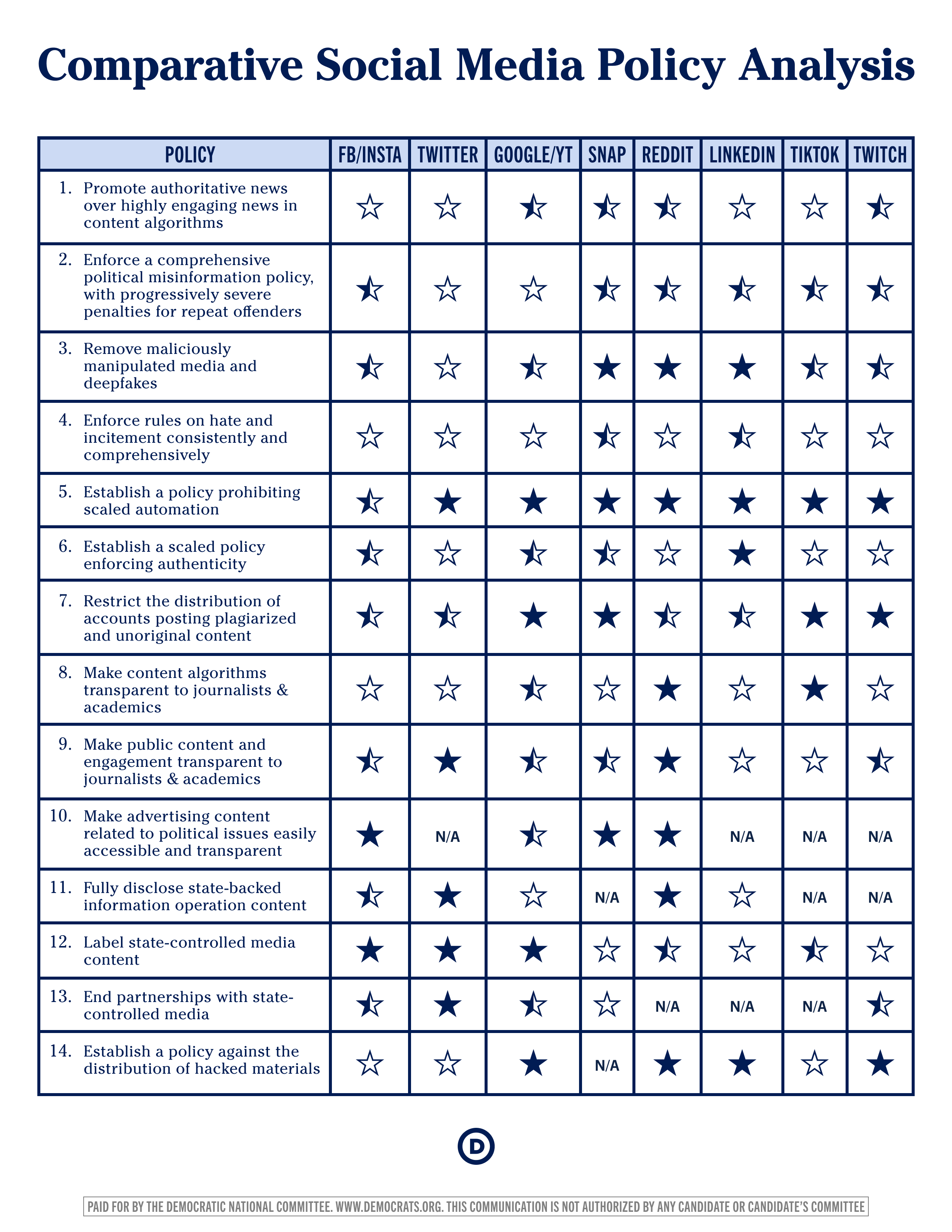

Comparative Social Media Policy Analysis

Social media has quickly become a major source of news for Americans. In a 2020 survey,1 over half of Americans reported getting news from social media on a regular basis—including 36% of Americans from Facebook, 23% from YouTube, and 15% from Twitter. Despite huge numbers of Americans turning to social media for news, major social media companies haven’t done enough to take responsibility for information quality on their sites. As quickly as these sites have become destinations for news seekers, they have just as quickly become large propagators of disinformation and propaganda.

The DNC is working with major social media companies to combat platform manipulation and train our campaigns on how best to secure their accounts and protect their brands against disinformation. While progress has been made since the 2016 elections, social media companies still have much to do to reduce the spread of disinformation and combat malicious activity. Social media companies are ultimately responsible for combating abuse and disinformation on their systems, but as an interested party, we’ve compiled this comparative policy analysis to present social media companies with additional potential solutions.

While this analysis compares major social media companies, we also call on all other online advertisers and publishers to undertake similar efforts to promote transparency and combat disinformation on their sites.

Explanation of Policy Determinations

1. Promote authoritative news over highly engaging news in content algorithms

Social media algorithms are largely responsible for determining what appears in our feeds and how prominently it appears. The decisions made by these algorithms are incredibly consequential, having the power to shape the beliefs and actions of billions of users around the world. Most social media algorithms are designed to maximize time spent on a site, which when applied to news tends to create a “race to the bottom of the brain stem”—boosting the most sensational, outrageous, and false content.
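To make the distinction between engagement-driven and authority-aware ranking concrete, here is a minimal sketch. It is not any platform's actual algorithm: the `Post` fields, the 0-to-1 scores, and the `authority_weight` parameter are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    engagement: float  # hypothetical normalized engagement signal in [0, 1]
    authority: float   # hypothetical source-authority score in [0, 1]


def rank_by_engagement(posts):
    """Order posts purely by engagement, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def rank_with_authority(posts, authority_weight=0.7):
    """Order posts by a weighted blend of engagement and source authority."""
    def score(p):
        return (1 - authority_weight) * p.engagement + authority_weight * p.authority
    return sorted(posts, key=score, reverse=True)


# Illustrative, made-up data.
posts = [
    Post("Outrage-bait rumor", engagement=0.9, authority=0.1),
    Post("Wire-service report", engagement=0.5, authority=0.9),
    Post("Local newspaper story", engagement=0.4, authority=0.8),
]

engagement_order = [p.title for p in rank_by_engagement(posts)]
authority_order = [p.title for p in rank_with_authority(posts)]
```

With this made-up data, the engagement-only ranking puts the rumor first, while the blended ranking moves it to last place. How a real system would measure "authority" is the hard policy question and is left open here.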

Facebook’s News Feed, responsible for the vast majority of content consumed on Facebook, prioritizes user engagement as its primary signal for algorithmic boosts—which tends to amplify fear-mongering, outrage-bait, and false news content on the site. “ClickGap,” an initiative rolled out in 2019, and partnerships with independent fact-checkers have reduced the reach of some very low-quality webpages, but the effective application of rules and policies has been repeatedly subject to political intervention from Facebook’s government relations team. The company temporarily employed News Ecosystem Quality (NEQ) ratings to promote high-quality news in November of 2020, but has no public plans to permanently integrate the scores into News Feed rankings. Recent changes to News Feed have prioritized articles containing original reporting and those with transparent authorship, but did not actively prioritize authoritative sources of news over non-trustworthy ones. Facebook’s alternative News Tab uses human curation to promote authoritative news sources, but is little-used2.

YouTube has partnered with its sister company Google to introduce PageRank into its news search and recommendation algorithm, raising the search visibility of authoritative news sources. These signals have also been integrated into YouTube’s “Up Next” algorithm, which is responsible for ~70% of watch time on the site. Despite progress, the site regularly elevates disreputable sources that news rating organization NewsGuard finds “fail to adhere to several basic journalistic standards.”

Twitter has employed anti-spam measures but has made little attempt to establish domain or account authority in algorithms, often boosting disinformation’s virality.

Snap’s Discover tab is open to authorized publishers only. Media partners publishing to Discover are vetted by Snap’s team of journalists and required to fact-check their content for accuracy. Within Discover, Snap does not elevate authoritative news sources over tabloid and viral news & opinion sites.

Reddit relies on users voting to rank content within feeds. While the company doesn’t directly promote authoritative news, the site’s downvoting functionality allows users to demote untrustworthy news sources—resulting in a higher quality news environment than exists on many other social media sites.

LinkedIn’s editorial team highlights trusted sources in its “LinkedIn News” panel, but the company has made no attempt to establish domain or account authority in feed algorithms.

TikTok has made efforts to diversify recommendations and discourage algorithmic rabbit holes, but has made no effort to algorithmically promote authoritative sources of news on its app.

Twitch uses human curation to highlight creators on its home page. Channel recommendation algorithms do not take source authoritativeness into account.

2. Enforce a comprehensive political misinformation policy, with progressively severe penalties for repeat offenders

While social media companies have been reluctant to evaluate the truthfulness of content on their websites, it’s critical that these companies acknowledge misinformation when it appears. Establishing and enforcing progressively severe penalties for users that frequently publish misinformation is an important way to deter its publication and spread.
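As an illustration of what "progressively severe penalties" could look like in practice, the following is a minimal sketch of a strike ladder. The penalty names, their order, and the `StrikeTracker` class are hypothetical and do not describe any company's enforcement system.

```python
from collections import defaultdict

# Hypothetical penalty ladder, mildest to most severe.
PENALTY_LADDER = [
    "label",
    "reduce_reach",
    "temporary_suspension",
    "permanent_removal",
]


class StrikeTracker:
    """Counts confirmed violations per account and returns an escalating penalty."""

    def __init__(self, ladder=PENALTY_LADDER):
        self.ladder = list(ladder)
        self.strikes = defaultdict(int)

    def record_violation(self, account):
        """Add one strike for the account and return the penalty now due."""
        self.strikes[account] += 1
        # Repeat offenders stay on the last (most severe) rung of the ladder.
        index = min(self.strikes[account], len(self.ladder)) - 1
        return self.ladder[index]


tracker = StrikeTracker()
repeat_offender = [tracker.record_violation("account_a") for _ in range(5)]
first_time = tracker.record_violation("account_b")
```

In this sketch a first-time violator receives only a label, while an account with four or more strikes is removed. A real policy would also need appeal and strike-expiry rules, which are omitted here.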

Facebook has partnered with independent, IFCN-certified third parties to fact-check content and has employed a remove/reduce/inform strategy to combat disinformation. Ad content that is determined to be false by one of Facebook’s third-party fact-checking partners is removed. Organic content determined to be false is covered with a disclaimer, accompanied by a fact-check, and its reach is reduced by content algorithms. Users attempting to post or share previously fact-checked content are prompted with the fact-check before they post, and users who shared the content before it was fact-checked receive a notification to that effect. Unfortunately, Facebook’s third-party fact-checking program has largely failed to scale to the size of the site’s misinformation problem, and misinformers remain able to freely repost previously fact-checked misinformation. The fact-checking program has not been extended to WhatsApp, and has been kneecapped by the company’s Republican-led government relations team. Content produced by elected officials and Facebook-approved candidates is exempt from Facebook’s fact-checking policy, a loophole exploited by some of the most prolific sources of misinformation on the site.

In 2020, Twitter expanded its civic integrity policy to cover misinformation that aims to undermine confidence in democratic elections and developed an escalating scale of penalties for repeat offenders, a policy the company enforced for a short time following the election. The company is developing a crowdsourced fact-checking program, “Birdwatch,” that would cover all types of misinformation but has not yet fully launched the program.

YouTube introduced news panels for breaking news events and some conspiracy content in 2018, and employs fact-check panels in YouTube Search for some popular searches debunked by IFCN fact-checkers. The company has established a policy against “demonstrably false claims that could significantly undermine participation or trust in an electoral or democratic process” in advertising content, a standard many bad actors have been able to evade. In December 2020, YouTube belatedly developed a policy and strike system against misinformation aimed at undermining confidence in democratic elections, which the company only began enforcing after the January 6 insurrection at the US Capitol. YouTube does not apply fact-checks to individual videos posted organically to its site.

Snap uses an in-house team of fact-checkers to enforce rules against “content that is misleading, deceptive, impersonates any person or entity” in political ads, and requires publishers on its Discover product to fact-check their content for accuracy. Fact-checking rules apply to all publishers on the site, including politicians, but do not include scaled penalties for repeat misinformers.

Reddit prohibits deceptive, untrue, or misleading claims in political advertisements and misleading information about the voting process under its impersonation policy. Enforcement of misinformation rules for organic posts is inconsistent, as subreddits like /r/conspiracy regularly post political misinformation with significant reach.

LinkedIn has partnered with third-party fact-checkers to prohibit political and civic misinformation on its site. The company does not penalize accounts that repeatedly publish misinformation.

TikTok has partnered with third-party fact-checkers to prohibit videos containing misinformation about elections or other civic processes. The company downranks unsubstantiated claims that its fact-checkers can’t verify. The company does not penalize accounts that repeatedly publish misinformation.

Twitch has partnered with anti-misinformation groups to remove serial misinformers under its Harmful Misinformation Actors policy. The company does not list non-civic political misinformation under the policy nor does it evaluate individual claims made in streams.

3. Remove maliciously edited media and deepfakes

Media manipulation and deepfake technology employed against political candidates have the potential to seriously warp voter perceptions, especially given the trust social media users currently place in video. It’s critical that social media companies acknowledge the heightened threat of manipulated media and establish policies to prevent its spread.

Facebook announced changes to its policy on manipulated media in January, banning deepfake videos produced using artificial intelligence or machine learning technology that include fabricated speech (except parody videos). The policy change does not cover other types of deepfakes or manipulated media, which, when not posted by an elected official or Facebook-approved political candidate, are subject to fact checking by Facebook’s third-party fact-checkers.

In February of 2020, Twitter announced a policy to label manipulated media on the site. Under the policy, only manipulated media that is “likely to impact public safety or cause serious harm” will be removed.

YouTube’s stated policy prohibits manipulated media that “misleads users and may pose a serious risk of egregious harm.” Enforcement of the policy, however, has been inconsistent.

Snap requires publishers on its Discover product to fact-check their content for accuracy. Snap enables user sharing only within users’ phone contacts lists and limits group sharing to 32 users at a time, making it difficult for maliciously manipulated media to spread on the app.

Reddit prohibits misleading deepfakes and other manipulated media under its impersonation policy.

LinkedIn prohibits deepfakes and other manipulated media intended to deceive.

TikTok’s stated policy prohibits harmful synthetic and manipulated media under its misinformation policy. Enforcement of the policy, however, has been inconsistent.

Twitch does not prohibit manipulated media in its community guidelines, but manipulated media can be the basis for channel removal under the company’s Harmful Misinformation Actors policy.

4. Enforce rules on hate speech consistently and comprehensively

Hate speech, also known as group defamation or group libel, is employed online to intimidate, exclude, and silence opposing points of view. Hateful and dehumanizing language targeted at individuals or groups based on their identity is harmful and can lead to real-world violence. While all major social media companies have developed extensive policies prohibiting hate speech, enforcement of those rules has been inconsistent. Unsophisticated, often outsourced content moderation resources are one cause of this inconsistency, as moderators lack the cultural context and expertise to accurately adjudicate content.

For example, reports have suggested that social media companies treat white nationalist terrorism differently than Islamic terrorism. There are widespread complaints from users that social media companies rarely take action on reported abuse. Often, the targets of abuse face consequences, while the perpetrators go unpunished. Some users, especially people of color, women, and members of the LGBTQIA community, have expressed the feeling that the “reporting system is almost like it’s not there sometimes.” (These same users can often be penalized by social media companies for highlighting hate speech employed against them.)

Facebook’s automated hate speech detection systems have steadily improved since they were introduced in 2017, and the company has made important policy improvements to remove white nationalism, militarized social movements, and Holocaust denial from its site. Unfortunately, many of these policies are poorly enforced, and hateful content, especially content demonizing immigrants and religious minorities, still goes unactioned. In 2016, the company quashed internal findings suggesting that Facebook was actively promoting extremist groups on the site, only to end all political group recommendations after the site enabled much of the organizing behind the January 6, 2021 Capitol insurrection. The company also repeatedly went back on its promise to enforce its Community Standards against politicians in 2020.

Twitter broadened its hate speech policy in 2020 to include language that dehumanizes on the basis of age, disability, disease, race, or ethnicity, and has taken steps to remove hate group leaders from its site, but hate content still pervades much of Twitter’s discourse and has been directly linked to offline violence. Twitter’s “Who to Follow” recommendation engine also actively prompts users to follow prominent sources of online hate and harassment.

In 2020, YouTube took steps to remove channels run by hate group leaders and developed creator tools to reduce the prevalence of hate in video comment sections. Despite this progress, virulently racist and bigoted channels are still recommended by YouTube’s “Up Next” algorithms and monetized via YouTube’s Partner Program.

Snap’s safety-by-design approach limits the spread of hate or harassing speech, but hate and bigotry have repeatedly spread widely on the site.

Reddit has made significant changes since a 2015 Southern Poverty Law Center article called the site home to “the most violently racist” content on the internet, introducing research into the impacts of hate speech and providing transparency into its reach on the site. Despite progress, Reddit users still report rampant racism on the site, and in June 2020 they published an open letter to the company’s CEO demanding the site take significant action. In response, Reddit announced a new content policy and an updated enforcement plan for hate content.

As a professional networking site, LinkedIn has established professional behavior standards to limit hate and harassment on its site – with some success. Some women’s groups, however, still report experiencing sustained abuse on the app.

TikTok has been found to be a potentially radicalizing force, and race-, religion-, and gender-based harassment are common aspects of TikTok’s comment sections. After an outcry from Black creators over harassment and unexplained account suspensions, TikTok launched an incubator program to develop Black talent on its app. Despite these promises, creators of color still report systematic discrimination on the app.

Twitch considers a broad range of hateful conduct – including off-site behavior by streamers – to make enforcement decisions and has developed innovative approaches to chat moderation. Despite company efforts, Twitch has struggled to address abuse and “hate raid” brigading on the site – often targeting LGBTQ+ streamers and streamers of color in particular. Streamers from marginalized groups have also been targets of dangerous “swattings” by Twitch users.

5. Establish a policy against scaled automation

One way bad actors seek to artificially boost their influence online is through the use of automation. Using computer software to post to social media is not malicious in itself, but unchecked automation can allow small groups of people to artificially manipulate online discourse and drown out competing views.

Facebook has anti-spam policies designed to thwart bad actors seeking to artificially boost their influence through high-volume posting (often via automation). The website does not have a policy preventing artificial amplification of content posted via its Pages product, which is used by some bad actors to artificially boost reach.

Twitter employs its own automation designed to combat coordinated manipulation and spam.

YouTube has scaled automation and video spam detection, thwarting efforts at coordinated manipulation.

Snap’s Discover tab is open to verified publishers only, whose content is manually produced.

Reddit has employed anti-spam measures and prohibits vote manipulation.

LinkedIn has employed anti-spam measures to combat coordinated manipulation.

TikTok prohibits coordinated inauthentic behaviors to exert influence or sway public opinion.

Twitch has employed anti-spam measures to combat coordinated manipulation.

6. Establish a scaled policy enforcing authenticity

Requiring social media users to be honest and transparent about who they are limits the ability of bad actors to manipulate online discourse. This is especially true of foreign bad actors, who are forced to create inauthentic or anonymous accounts to infiltrate American communities online. While there may be a place for anonymous political speech, account anonymity makes deception and community infiltration fairly easy for bad actors. Sites choosing to allow anonymous accounts should consider incentivizing transparency in content algorithms.

Facebook has established authenticity as a requirement for user accounts and employed automation and human flagging to improve its fake account detection. The company’s authenticity policy does not apply to Facebook’s Pages (a substantial portion of content) or Instagram products, which have been exploited by a number of foreign actors, both politically and commercially motivated.

Twitter has policies on impersonation and misleading profile information, but does not require user accounts to represent authentic humans.

YouTube allows pseudonymous publishing and commenting, and does not require accounts producing or interacting with video content to be authentic. Google Search and Google News consider anonymous/low-transparency publishers low quality and downrank them in favor of more transparent publishers.

Snap’s “Stars” and “Discover” features are available to select, verified publishers only. Snap allows pseudonymous accounts via its “chat” and “story” function, but makes it difficult for such accounts to grow audiences.

Reddit encourages pseudonymous user accounts and does not require user authenticity.

LinkedIn requires users to use their real names on the site.

TikTok has policies on impersonation and misleading profile information, but does not require user accounts to represent authentic humans.

Twitch allows pseudonymous publishing and commenting, and does not require accounts producing or interacting with video content to be authentic.

7. Restrict the distribution of accounts posting plagiarized and unoriginal content

Plagiarism and aggregation of content produced by others is another way foreign actors are able to infiltrate domestic online communities. Foreign bad actors often have a limited understanding of the communities they want to target, which means they have difficulty producing relevant original content. To build audiences online, they frequently resort to plagiarizing content created within the community.

For example, Facebook scammers in Macedonia have admitted in interviews to plagiarizing their stories from U.S. outlets. In a takedown of suspected Russian Internet Research Agency accounts on Instagram, a majority of accounts re-posted content originally produced and popularized by Americans. While copyright infringement laws do apply to social media companies, current company policies are failing to prevent foreign bad actors from infiltrating domestic communities through large-scale intellectual property theft.

Facebook downranks domains with stolen content and prioritizes original news reporting, but it’s clear from top article and publisher lists that these policies and systems are not applied at the level necessary for real impact. Facebook has a policy against unoriginal content in Pages but has not publicly announced enforcement actions on those grounds. Originality has been incorporated into ranking algorithms on Instagram.

Twitter has removed content farm accounts and identical content across automated networks, but does not encourage original content production.

Google Search/News/YouTube considers plagiarism, copying, article spinning, and other forms of low-effort content production to be “low quality” and downranks it heavily in favor of publishers of original content.

Snap’s Discover tab is open to verified sources publishing original content only.

Reddit has introduced an “OC” tag for users to mark original content and relies on community moderators and users to demote duplicate content, with mixed success.

TikTok doesn’t recommend duplicated content in its #ForYou feed.

Twitch prohibits creators from stealing content and posting content from other sites.

8. Make content recommendations transparent to journalists/academics

Social media algorithms are largely responsible for deciding what appears in our feeds and how prominently it appears. The decisions made by these algorithms are incredibly consequential, having the power to manipulate the beliefs and actions of billions of users around the world. Understanding how social media algorithms work is crucial to understanding what these companies value and what types of content are benefitting from powerful algorithmic boosts.

Facebook has provided very little transparency into the inputs and weights that drive its “NewsFeed” algorithm’s ranking decisions, which are responsible for the vast majority of content consumed on the site. Facebook announced an algorithm change in January 2018 designed to reduce the role of publishers and increase “time well spent” person-to-person interactions on the site. Public analysis of the change, however, found that the algorithm actually increased the consumption of articles around divisive topics. Details of Facebook-announced algorithmic demotions related to fact-checking, original news reporting, and a recently announced push to demote political posts are all opaque.

Twitter has provided little transparency into how its algorithms determine what appears in the top search results, ranked tweets, “who to follow,” and “in case you missed it” sections of its site. Major algorithm changes designed to reduce spam and harassment on the site were announced in June 2018, and Twitter has previously indicated that algorithm changes happen on a daily or weekly basis. Twitter also rolled out a feature in December 2018 allowing users to choose to see tweets from accounts they follow in chronological order, effectively disabling Twitter’s ranking algorithm.

Google’s Search and News ranking algorithms (integrated into YouTube search) have been thoroughly documented. YouTube has provided very little transparency into its content recommendations, however, which power 70% of content consumed on the site.

Snap has provided little transparency into how Discover ranks content for users or how the company chooses the publishers it contracts with.

Reddit’s ranking algorithms are partially open source, with remaining non-public aspects limited to efforts to prevent vote manipulation.

LinkedIn has provided little transparency into how it ranks content in feeds.

TikTok has opened a transparency center to enable experts to analyze the code that drives its algorithm.

Twitch has provided little transparency into how it recommends channels.

9. Make public content and engagement transparent to journalists/academics

Understanding the health of social media discourse requires access to data on that discourse. Social media companies have taken very different approaches to transparency on their sites.

Facebook has made its CrowdTangle tool, which provides some transparency into user engagement, available to journalists and academic researchers. The company does not provide full transparency into behavior on its site, nor has the CrowdTangle tool been made available publicly. Publicly available data on Instagram is extremely limited.

Twitter’s open API allows content and engagement to be easily analyzed by independent researchers, journalists, and academics.

YouTube allows approved academic researchers to study content and engagement. The company has also developed a public API that allows for public analysis of video summary information and engagement. Video transcripts are not available via the public API.

All public content is available to users via the Snapchat app. Snap does not make Discover interaction data public.

Reddit’s open API allows content and engagement to be easily analyzed by independent researchers, journalists, and academics.

LinkedIn has not made public content or engagement data available to researchers.

TikTok has not made public content or engagement data available to researchers.

Twitch has developed a public API that allows for public analysis of video summary information and engagement. Video transcripts are not available via the public API.

10. Make advertising content related to political issues easily accessible and transparent

Political ads are not limited to just those placed by candidates. Political action committees and interest groups spend large sums of money advocating for issues in a way that affects political opinion and the electoral choices of voters. Public understanding of these ad campaigns is crucial to understanding who is trying to influence political opinion and how.

The issue of opacity in political ads is compounded when combined with precise, malicious microtargeting and disinformation. Current policies at some major social media companies allow highly targeted lies to be directed at key voting groups in a way that is hidden from public scrutiny.

Facebook has taken a broad definition of political speech and included issue ads in its political ad transparency initiative.

ⁿ/ₐ Twitter does not allow political ads.

Google and YouTube have limited their ad transparency initiative to ads naming U.S. state-level candidates and officeholders, ballot measures, and ads that mention federal or state political parties. Political issue ads not directly tied to ballot measures and politically charged ads from partisan propaganda outlets have been exempted from the ad library.

Snap has taken a broad definition of political speech and included issue and campaign-related ads in its political ad library.

Reddit has taken a broad definition of political speech and included issue and campaign-related ads in its political ad library.

ⁿ/ₐ LinkedIn does not allow political ads.

ⁿ/ₐ TikTok does not allow political ads.

ⁿ/ₐ Twitch does not allow political ads.

11. Fully disclose state-backed information operation content

When state-sponsored information operations are detected, it’s crucial that social media companies be transparent about how their sites were weaponized. Fully releasing datasets allows the public and research community to understand the complete nature of the operation, its goals, methods, and size. Full transparency also allows independent researchers to further expound on company findings, sometimes uncovering media assets not initially detected.

Twitter has disclosed full datasets of state-backed information operations.

Facebook provides sample content from state-backed information operations via third-party analysis.

Google/YouTube provides only sample content from state-backed information operations.

ⁿ/ₐ Snap’s safety-by-design approach has made it difficult for state-backed information operations to have success on its platform. Snap was not one of the apps used by the Russian Internet Research Agency to interfere in American elections in 2016, but has committed to transparency should such an operation be detected.

Reddit has disclosed full datasets of state-backed information operations.

ⁿ/ₐ There is no public evidence of state-backed information operations on TikTok.

12. Label state-controlled media content

State-controlled media combines the opinion-making power of news with the strategic backing of government. Separate from publicly funded, editorially independent outlets, state-controlled media tends to present news material in ways that are biased in favor of the controlling government (i.e. propaganda).

Facebook has introduced labeling for state-controlled media.

YouTube has introduced labeling on all state-controlled media on its site.

Twitter has introduced labeling of content from state-controlled media.

Snap has not introduced labeling of content from state-controlled media.

Reddit has banned links to Russian state-controlled media but has not introduced labeling for content from other state-controlled media outlets.

LinkedIn has not introduced labeling of content from state-controlled media.

TikTok has introduced labeling for some state-controlled media content.

Twitch has banned Russian state media but has not introduced labeling for other state-controlled media content.

13. End partnerships with state-controlled media

As outlined in policy #12, state-controlled media combines the opinion-making power of news with the strategic backing of government. Separate from publicly funded, editorially independent outlets, state-controlled media tends to present news material in ways that are biased towards the goal of the controlling government (i.e. propaganda). Allowing these outlets to directly place content in front of Americans via advertising and/or profit from content published on their sites enhances these outlets’ ability to propagandize and misinform the public.

Facebook has ended its business relationships with Russian state-controlled media and announced it would temporarily ban all state-controlled media advertising in the US ahead of the November 2020 election.

Twitter has banned advertising from state-controlled media.

YouTube has removed Russian state-controlled media channels but allows other state-controlled media to advertise and participate in its advertising programs.

Snap partners with state-controlled media in its “Discover” tab.

ⁿ/ₐ To date, Reddit has not allowed state-controlled media to advertise on its site. Politics-related news advertisements are subject to the company’s political ad policy.

ⁿ/ₐ To date, LinkedIn has not partnered with state-controlled media.

ⁿ/ₐ To date, TikTok has not allowed state-controlled media to advertise on its app.

Twitch has removed Russian state-controlled media channels but allows other state-controlled media to advertise and participate in its advertising programs.

14. Establish a policy against the distribution of hacked materials

One particularly damaging tactic used by nation-state actors to disinform voters is the “hack and dump” operation, in which stolen documents are selectively published, forged, and/or distorted. While much of the burden of responsibly handling hacked materials falls on the professional media, social media companies can play a role in restricting distribution of these materials.

Facebook’s “newsworthiness” exception to its hacked materials policy renders it ineffective against political hack-and-dump operations.

Twitter’s hacked materials policy prohibits the direct distribution of hacked materials by hackers, but does not prohibit the publication of hacked materials by other Twitter users.

Google and YouTube have established a policy that bans content facilitating access to hacked materials and demonetizes pages hosting hacked content.

ⁿ/ₐ Snap’s semi-closed Discover product makes it near-impossible for hackers or their affiliates to publish hacked materials for large audiences directly to its app.

Reddit’s rules prohibit the sharing of personal or confidential information.

LinkedIn prohibits the sharing of personal or sensitive information.

TikTok does not prohibit the publication of hacked materials.

Twitch prohibits any unauthorized sharing of private information.

Online disinformation is a whole-of-society problem that requires a whole-of-society response. Please visit democrats.org/disinfo for our full list of counter-disinformation recommendations.